TWC #7

SOTA updates between 12 Sept – 18 Sept 2022

- Learning with noisy labels

- Semi-supervised image classification

- Domain adaptation

- Few-shot image classification

This post is a consolidation of daily Twitter posts tracking SOTA changes.

Official code releases (with pre-trained models in most cases) are also available for these tasks.

Special Update this week

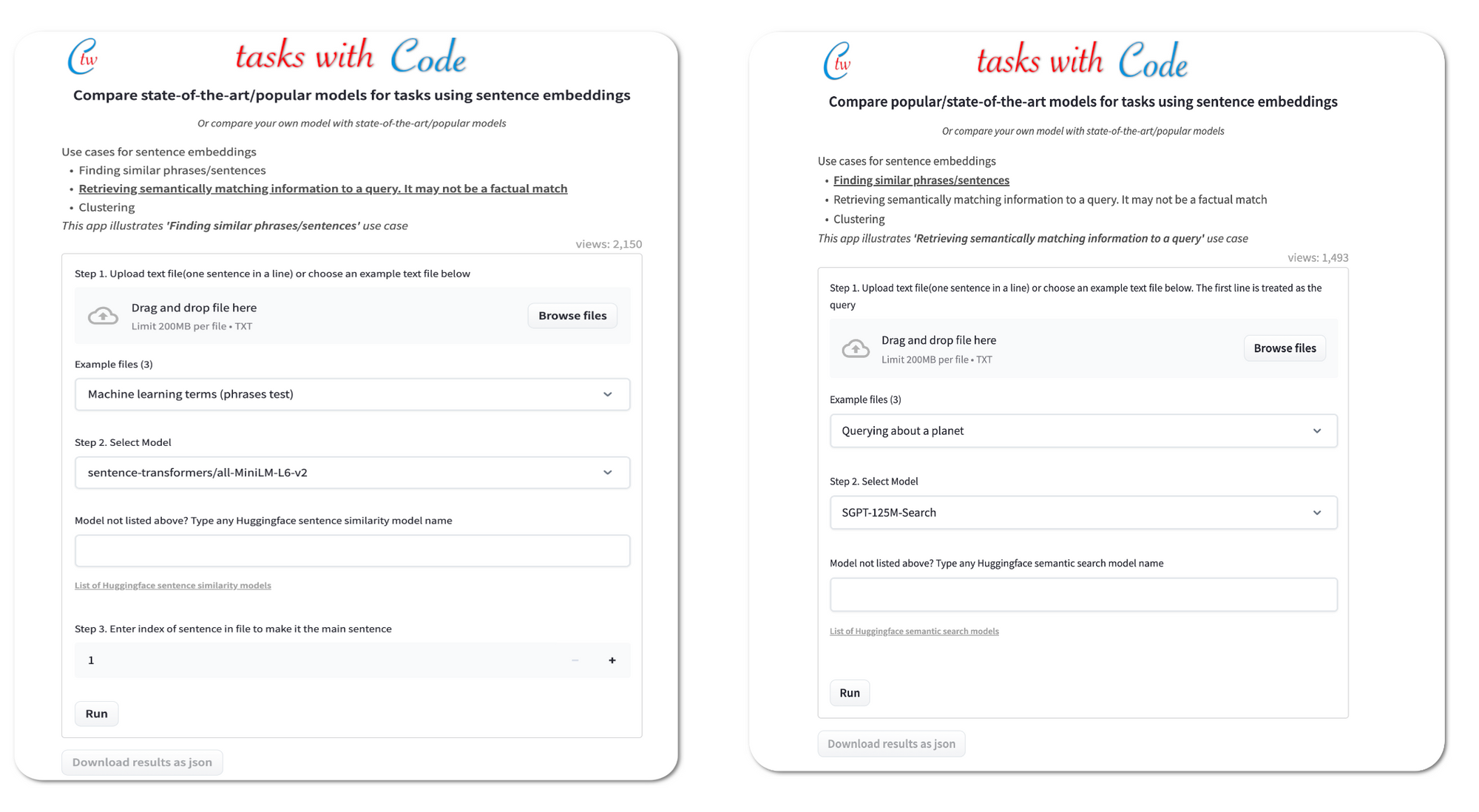

We released a couple of apps for comparing models on sentence similarity and semantic search tasks. Each app lets you compare multiple models in one interface, including both SOTA models and popular models (> 500k downloads) on Hugging Face. You can upload a custom file (with up to 100 sentences) or choose from the example files provided. In the sentence similarity app, any line in the file can serve as the main sentence against which the other sentences are compared. The results from both apps can be downloaded for further analysis.
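As a rough illustration of the kind of comparison the sentence similarity app performs, the sketch below ranks every line of a file against a chosen main line using cosine similarity. The toy two-dimensional vectors stand in for real sentence embeddings, and the names `cosine_similarity` and `rank_against_main` are hypothetical; this is not the apps' actual code.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def rank_against_main(embeddings, main_index):
    """Return (line_index, score) pairs for all other lines, best match first."""
    main = embeddings[main_index]
    scores = [
        (i, cosine_similarity(main, vec))
        for i, vec in enumerate(embeddings)
        if i != main_index
    ]
    return sorted(scores, key=lambda pair: pair[1], reverse=True)

# Toy embeddings (assumption): one vector per line of the uploaded file.
toy_embeddings = [
    [1.0, 0.0],  # line 0, used as the main sentence
    [0.9, 0.1],  # line 1, points almost the same way as line 0
    [0.0, 1.0],  # line 2, orthogonal to line 0
]
ranking = rank_against_main(toy_embeddings, main_index=0)
```

Swapping in a different model only changes how the embeddings are produced, which is why several models can be compared side by side on the same file.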

#1 in learning with noisy labels on 4 CIFAR family datasets

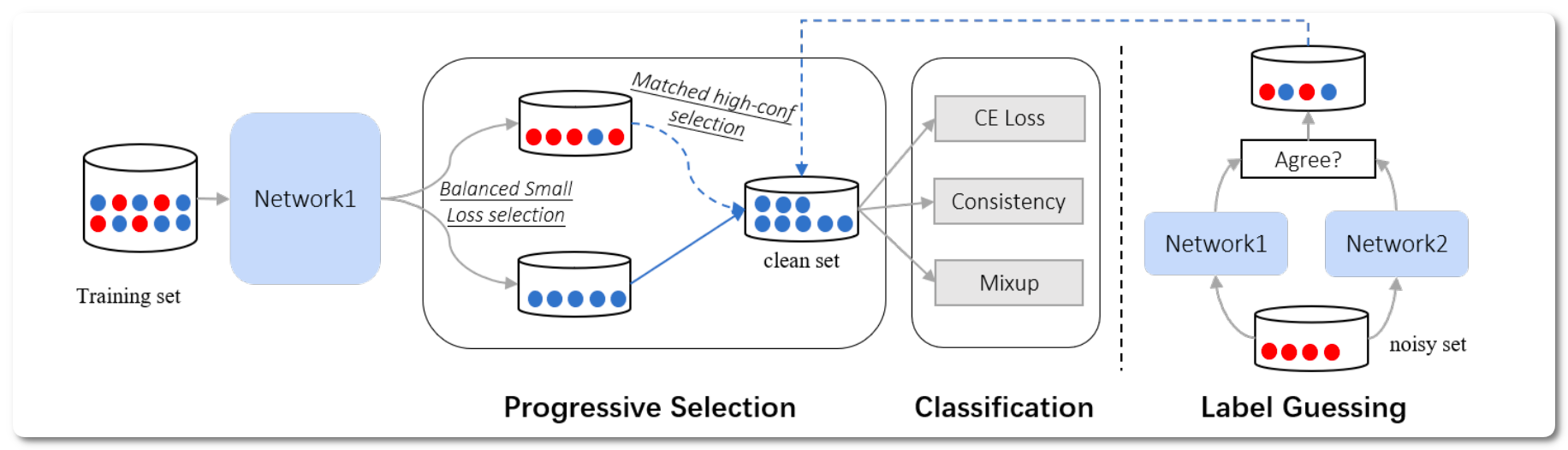

Paper: ProMix: Combating Label Noise via Maximizing Clean Sample Utility

Github code released by Haobo Wang (first author in paper) Model link: Not released to date.

Submitted on 22 July 2022. Code updated 5 July 2022

Notes: This paper proposes a novel noisy-label learning framework that attempts to maximize the utility of clean samples to boost performance. A matched high-confidence selection technique selects examples whose predictions are both high-confidence and in agreement with their given labels.
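The selection rule described in the notes can be sketched as follows. This is a minimal illustration, assuming per-sample class probabilities and a fixed confidence threshold; the function name `select_matched_high_confidence` and the threshold value 0.9 are hypothetical and not taken from the ProMix code.

```python
def select_matched_high_confidence(probs, given_labels, threshold=0.9):
    """Flag samples whose prediction is confident AND matches the given label.

    probs: list of per-class probability lists, one per sample.
    given_labels: list of (possibly noisy) integer labels.
    threshold: illustrative confidence cut-off (assumption).
    """
    selected = []
    for row, label in zip(probs, given_labels):
        confidence = max(row)
        predicted = row.index(confidence)
        selected.append(predicted == label and confidence >= threshold)
    return selected

probs = [
    [0.95, 0.03, 0.02],  # confident and agrees with label 0
    [0.50, 0.30, 0.20],  # agrees with label 0 but not confident
    [0.05, 0.92, 0.03],  # confident, but the given label is 2
]
labels = [0, 0, 2]
mask = select_matched_high_confidence(probs, labels)
```

Only the first sample passes both checks, so it alone would be treated as a clean sample in this toy example.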

Model Name: ProMix

License: None to date

Demo page link? None to date

#1 in semi-supervised image classification on 2 CIFAR datasets

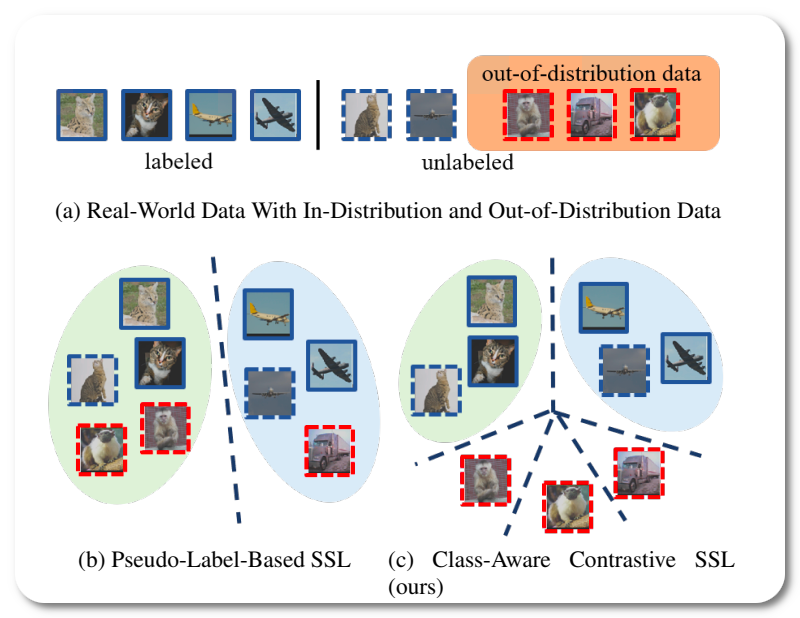

Paper: Class-Aware Contrastive Semi-Supervised Learning

Github code released by Kai Wu (second author in paper) Model link: Not released to date.

Submitted on 4 March 2022 (v1), last revised 9 Sept 2022 (v3). Code updated 9 Sept 2022

Notes: The paper proposes a general method that works as a drop-in helper to improve pseudo-label quality and enhance the model's robustness in real-world settings. Rather than treating real-world data as a single union set, the method handles reliable in-distribution data with class-wise clustering for blending into downstream tasks, and noisy out-of-distribution data with image-wise contrastive learning for better generalization.

Model Name: CCSSL(FixMatch)

Model links. Models not released to date

License: Non-commercial use

Demo page link? None to date

#1 in Domain Adaptation on ImageCLEF-DA & top 10 in 3 other datasets

Github code released by Lin Chen (first author in paper) Model link: Not released to date.

Submitted on 8 April 2022. Code last updated on 14 Sept 2022

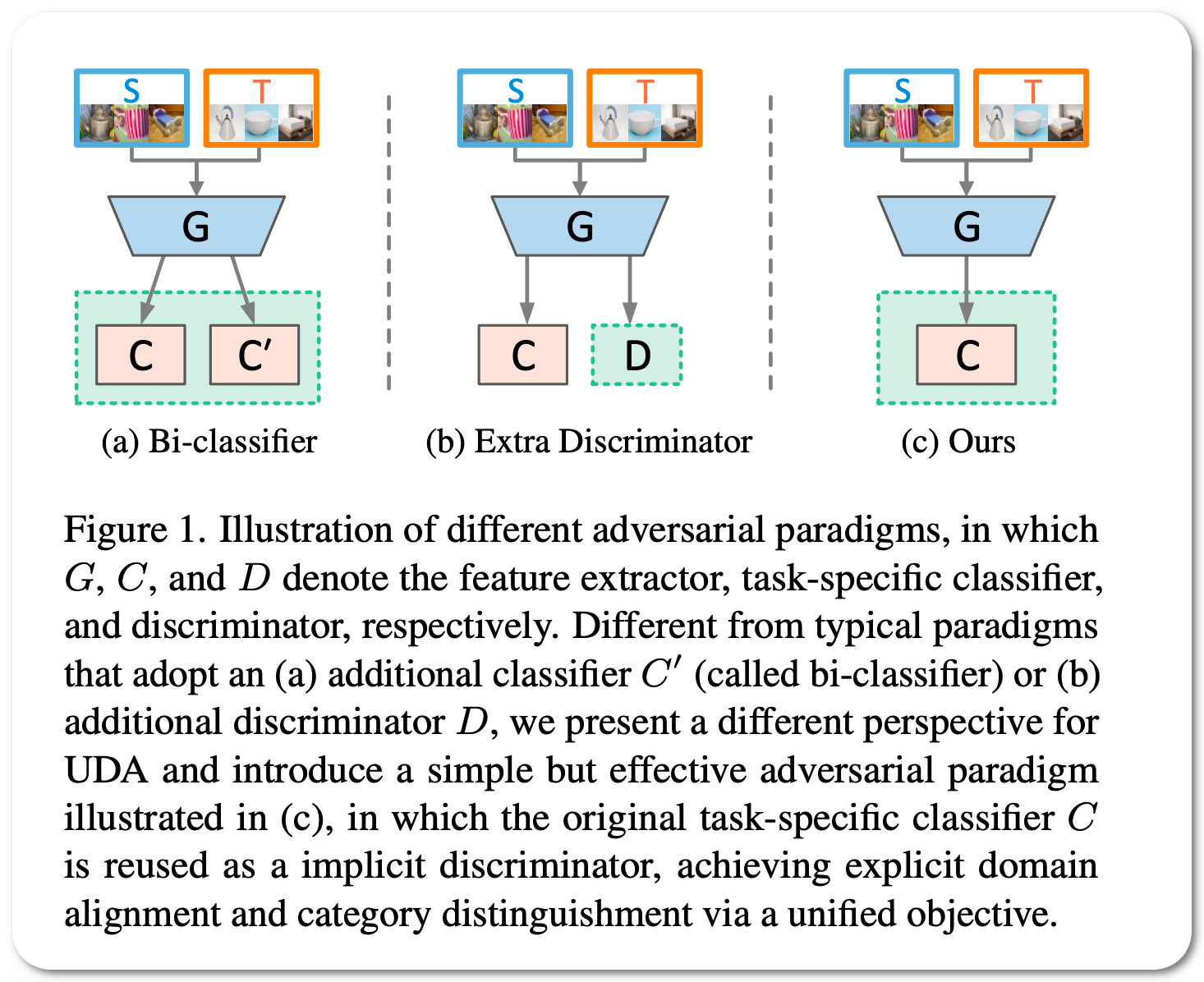

Notes: This paper proposes an adversarial paradigm in the form of a discriminator-free adversarial learning network (DALN), in which the category classifier is reused as a discriminator. This achieves explicit domain alignment and category distinguishing through a unified objective, enabling the model to leverage the predicted discriminative information for sufficient feature alignment.

Model Name: MCC+NWD

Score (↑) : 90.7 (Prev:90.4)

Δ: .3 (Metric: accuracy)

Model links. Model not released yet

License: Not listed

Demo page link? None to date

#1 in few-shot image classification on Meta-Dataset

Github code released by Shell Xu Hu (first author in paper) Model link: Not released to date.

Submitted on 15 April 2022. Code last updated on 8 June 2022

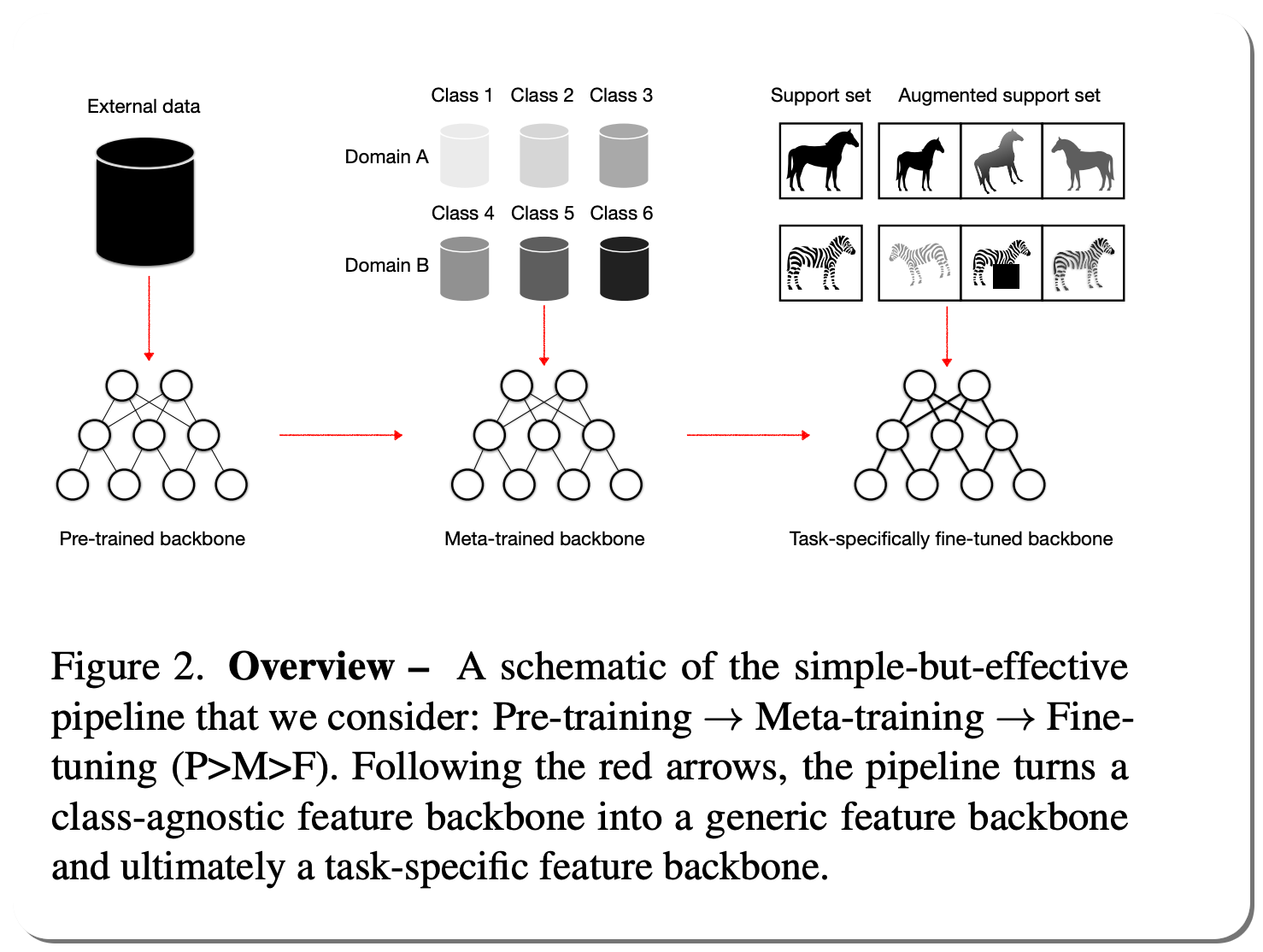

Notes: This paper proposes a pipeline for more realistic and practical few-shot image classification settings. It examines few-shot learning from the perspective of neural network architecture, as well as a three-stage pipeline of network updates under different data supplies.

Code: Github link

Model Name: P>M>F

Score (↑) : 84.75 (Prev:78.07)

Δ: 6.68 (Metric: accuracy)

Model links. Model not released yet

License: Not listed

Demo page link? None to date

#1 in few-shot image classification on CIFAR-FS 5-way (5-shot)

Paper: The Self-Optimal-Transport Feature Transform

Github code released by Daniel Shalam (first author in paper) Model link: In github page

Submitted on 6 April 2022. Code last updated on 28 Aug 2022

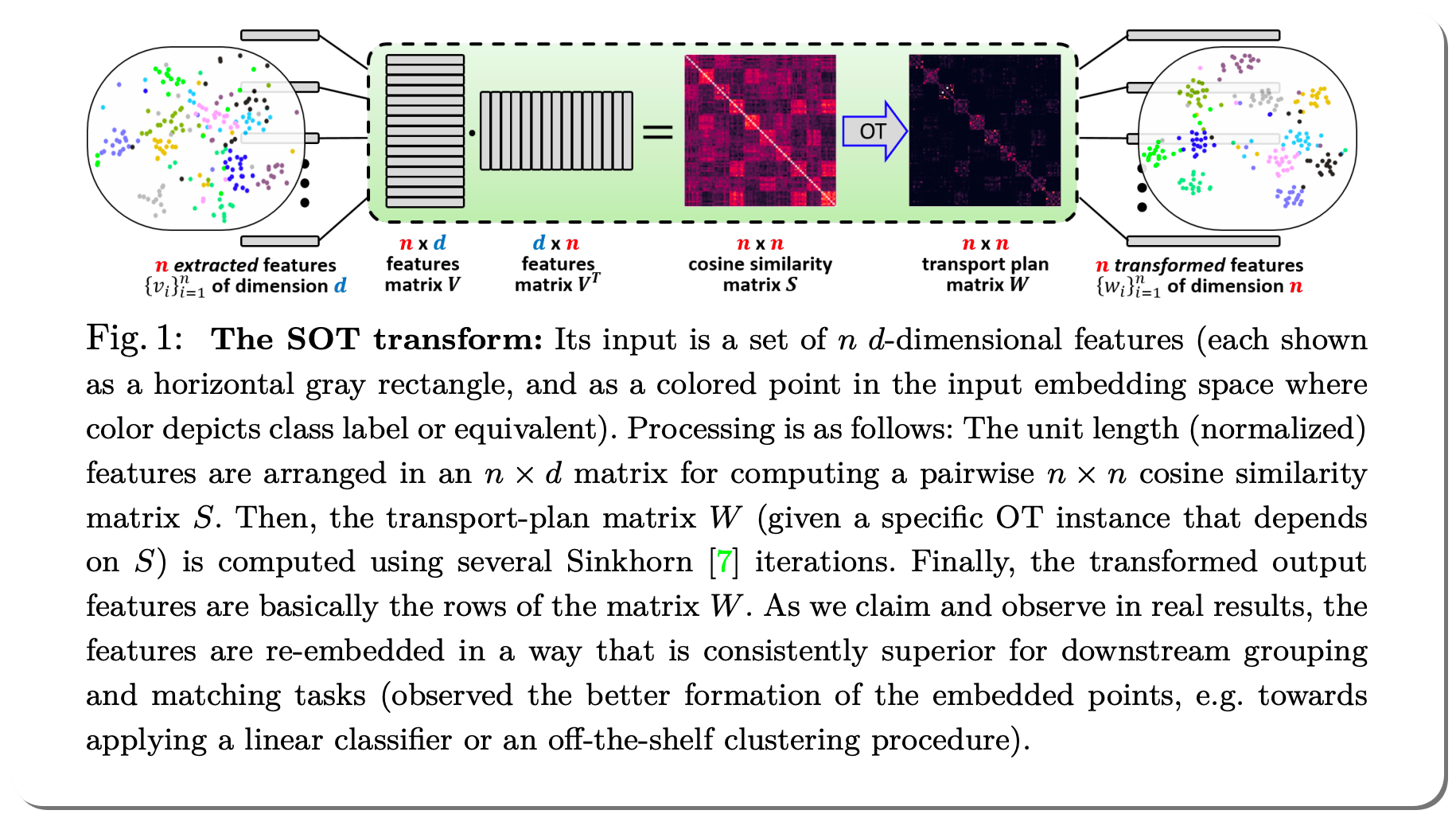

Notes: This paper attempts to improve the set of features of a data instance to facilitate downstream matching or grouping tasks. The transformed set encodes a rich representation of high-order relations between the instance features. Distances between transformed features capture both their direct original similarity and their third-party agreement regarding similarity to other features in the set.
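One way to picture such a transform is sketched below: build a self-similarity kernel over the set of features, balance it with Sinkhorn-style row and column normalization, and use each row of the result as the new feature of that instance. This is a simplified illustration under assumptions, not the paper's exact formulation; the function name `self_transport_features`, the `reg` and `iterations` parameters, and the zeroed diagonal are all choices made for this example.

```python
import math

def normalize(vec):
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]

def self_transport_features(features, reg=0.1, iterations=50):
    """Sinkhorn-normalize a self-similarity kernel; row i is the new feature i."""
    unit = [normalize(f) for f in features]
    n = len(unit)
    # Kernel from cosine distance; the diagonal is zeroed so that an
    # instance cannot simply match itself (assumption for this sketch).
    kernel = [
        [
            0.0 if i == j
            else math.exp(-(1.0 - sum(a * b for a, b in zip(unit[i], unit[j]))) / reg)
            for j in range(n)
        ]
        for i in range(n)
    ]
    for _ in range(iterations):
        # Alternate row and column normalization (Sinkhorn iterations).
        kernel = [[v / sum(row) for v in row] for row in kernel]
        col_sums = [sum(kernel[i][j] for i in range(n)) for j in range(n)]
        kernel = [[kernel[i][j] / col_sums[j] for j in range(n)] for i in range(n)]
    return kernel

# Toy set with two loose groups: instances 0 and 1, and instances 2 and 3.
features = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
W = self_transport_features(features)
```

Because each row describes how one instance relates to every other instance in the set, two rows end up close when the instances are similar to each other and also agree on which other instances they resemble.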

Model Name: SOT(transductive)

Score (↑) : 93.83 (Prev:92.2)

Δ: 1.63 (Metric: accuracy)

License: Not listed

Demo page link? None to date

#1 in few-shot image classification on Tiered ImageNet 5-way (5-shot)

Paper: Attribute Surrogates Learning and Spectral Tokens Pooling in Transformers for Few-shot Learning

Github code released by Weihan Liang (author in paper) Model link: In github page

Submitted on 17 March 2022. Code last updated on 15 Aug 2022

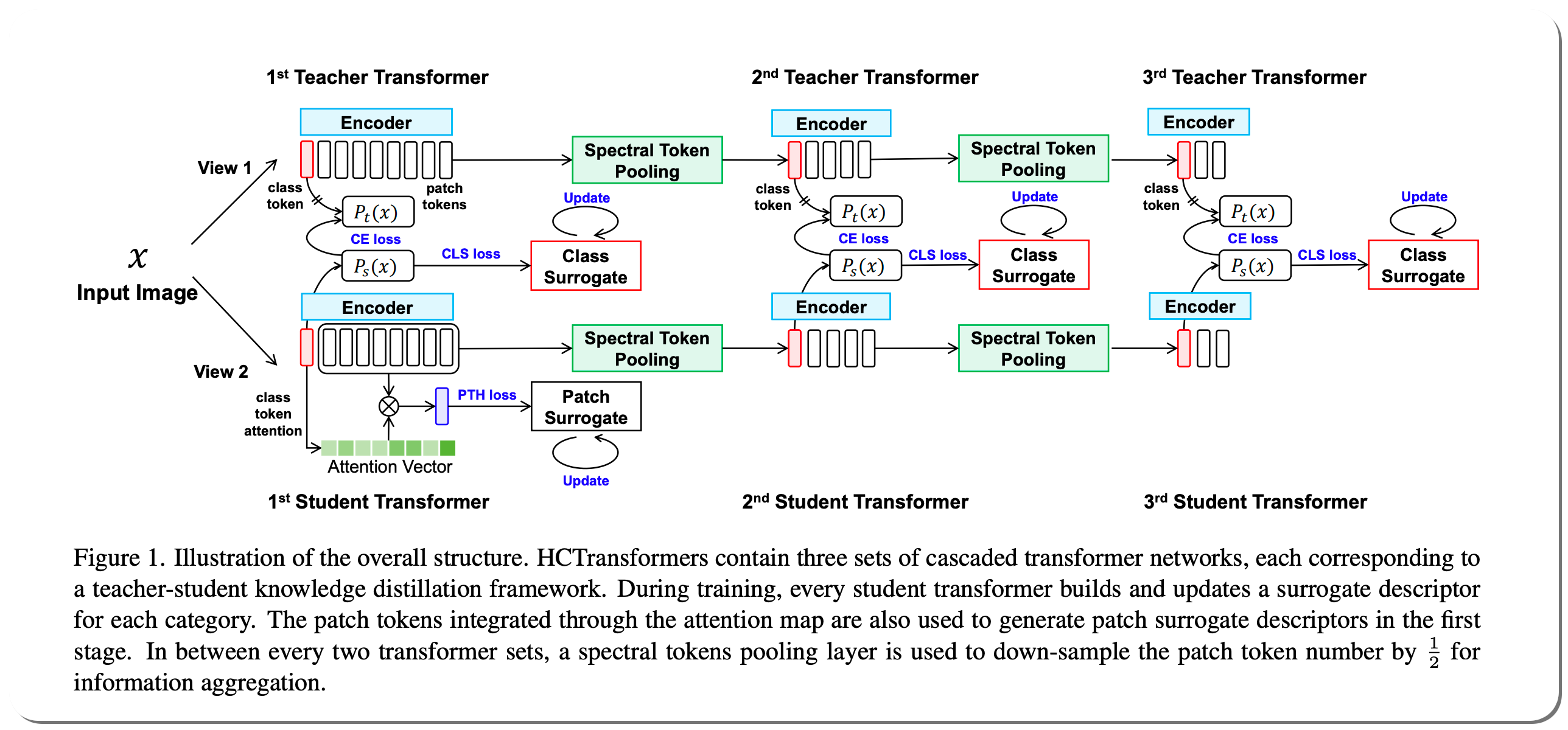

Notes: This paper addresses a weakness of vision transformers when data is insufficient: they get stuck in overfitting and show inferior performance. To improve data efficiency, it proposes hierarchically cascaded transformers that exploit intrinsic image structures through spectral tokens pooling and optimize the learnable parameters through latent attribute surrogates.

Model Name: HCTransformers

Score (↑) : 91.72 (Prev:91.09)

Δ: .63 (Metric: accuracy)

Model links. Models in github link

License: Apache license granting permission for commercial use

Demo page link? None to date